Comparing Uncertainty Methods

Late 2024 — Deep Ensembles vs MC Dropout vs Deterministic

About

A comparison of uncertainty estimation methods using Bayesian neural networks (LeNet5 with dropout) on the Dirty-MNIST, MNIST, and Fashion-MNIST datasets. It covers classification with rejection, out-of-distribution (OoD) detection, and density-based uncertainty.

Methods Compared

Deep Ensembles

Multiple independently trained models averaged for predictions

MC Dropout

Dropout at inference time for Bayesian approximation

Deterministic

Standard neural network (baseline)
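As a minimal sketch of what the first two methods have in common: both produce several softmax outputs per input (one per ensemble member, or one per stochastic forward pass with dropout left on) and average them. The function name `average_predictions` and the example probabilities are illustrative assumptions, not taken from the project code.

```python
from typing import List, Sequence


def average_predictions(member_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average class probabilities over ensemble members or MC Dropout passes."""
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    return [sum(p[c] for p in member_probs) / n_members for c in range(n_classes)]


# Hypothetical softmax outputs for one input from three ensemble members
# (or three forward passes with dropout active at inference time).
probs = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.8, 0.1, 0.1],
]
mean_probs = average_predictions(probs)

# The predicted class is the argmax of the averaged distribution.
predicted_class = max(range(len(mean_probs)), key=mean_probs.__getitem__)
```

The deterministic baseline corresponds to the special case of a single member, where the average is just that model's own softmax output.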

Model Training Results

Models were trained on Dirty-MNIST and evaluated on the MNIST test set.

| Method | Accuracy | NLL |
|---|---|---|
| Deep Ensembles | 98.9% | 0.0367 |
| MC Dropout | 99.0% | 0.04 |
| Deterministic | 98.8% | 0.067 |
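For reference, the two metrics in the table can be computed roughly as follows. This is a sketch assuming NLL means the average negative log-probability assigned to the true class, measured in nats; the helper name `accuracy_and_nll` and the toy data are hypothetical.

```python
import math
from typing import Sequence, Tuple


def accuracy_and_nll(
    probs: Sequence[Sequence[float]],
    labels: Sequence[int],
    eps: float = 1e-12,
) -> Tuple[float, float]:
    """Return (accuracy, mean negative log-likelihood in nats)."""
    correct = 0
    total_nll = 0.0
    for p, y in zip(probs, labels):
        pred = max(range(len(p)), key=p.__getitem__)  # argmax class
        correct += int(pred == y)
        # Clamp to eps so a zero probability does not produce log(0).
        total_nll -= math.log(max(p[y], eps))
    n = len(labels)
    return correct / n, total_nll / n


# Toy example: three inputs, two classes (made-up values).
toy_probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
toy_labels = [0, 1, 1]
acc, nll = accuracy_and_nll(toy_probs, toy_labels)
```

A lower NLL means the model puts more probability mass on the correct class, so it penalizes confident mistakes more heavily than accuracy does.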

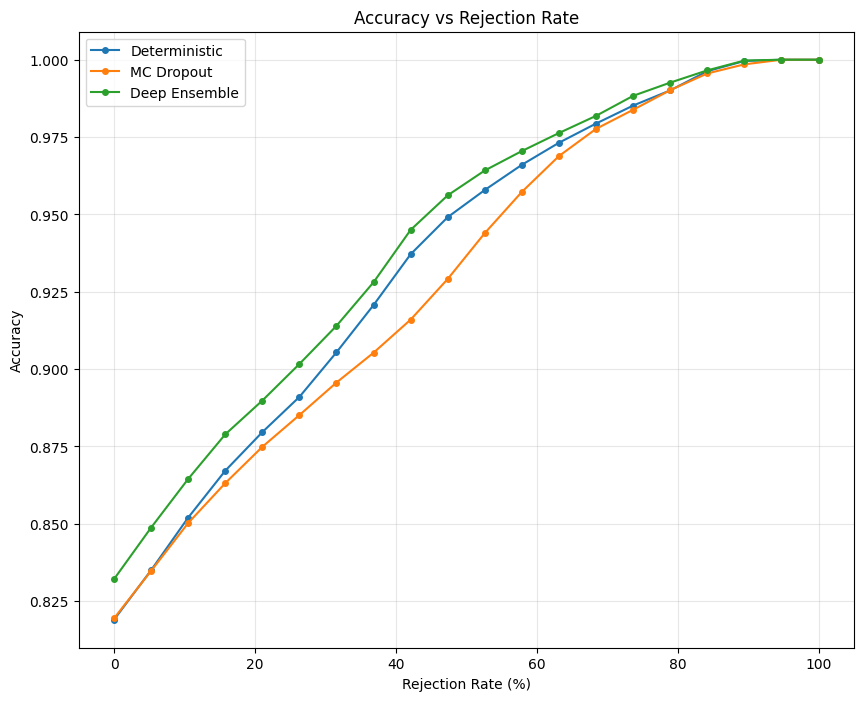

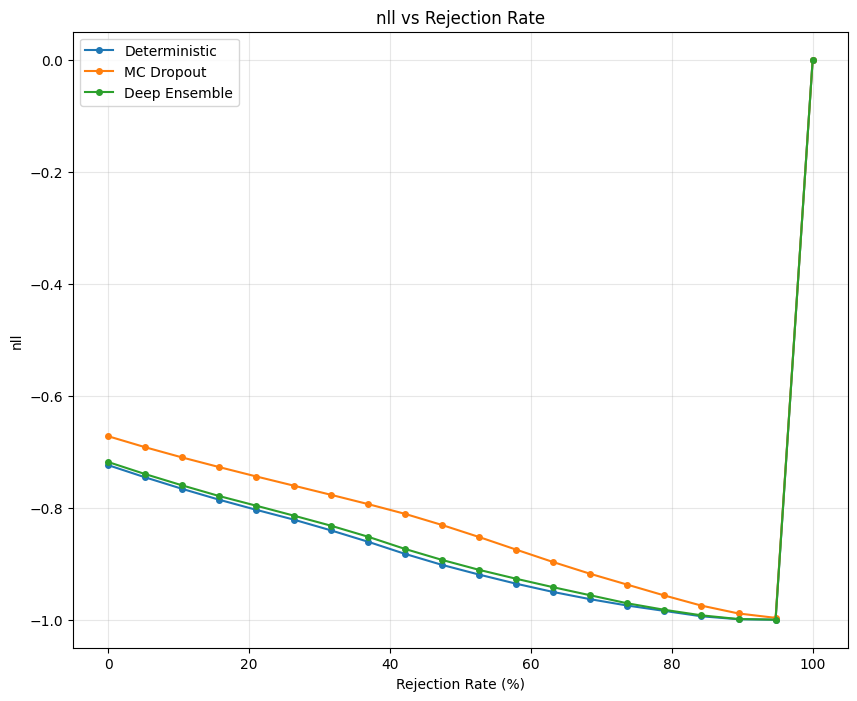

Classification with Rejection

Predictions are ranked by uncertainty and the least confident ones are rejected. As the rejection rate rises, only the more confident predictions are retained, so accuracy on the retained set increases.
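The rejection procedure can be sketched as below. This assumes one scalar uncertainty score per prediction (for example predictive entropy) and a per-prediction correctness flag; the function name and toy values are illustrative, not the project's implementation.

```python
from typing import List, Sequence


def accuracy_after_rejection(
    uncertainty: Sequence[float],
    correct: Sequence[bool],
    rejection_rate: float,
) -> float:
    """Accuracy on the predictions kept after rejecting the most uncertain fraction."""
    if not 0.0 <= rejection_rate < 1.0:
        raise ValueError("rejection_rate must be in [0, 1)")
    # Sort indices from most confident (lowest uncertainty) to least confident.
    order = sorted(range(len(uncertainty)), key=lambda i: uncertainty[i])
    n_reject = int(len(order) * rejection_rate)
    kept = order[: len(order) - n_reject]
    return sum(correct[i] for i in kept) / len(kept)


# Toy data: five predictions, where the two wrong ones are the most uncertain.
toy_uncertainty = [0.05, 0.9, 0.1, 0.6, 0.2]
toy_correct = [True, False, True, False, True]

curve: List[float] = [
    accuracy_after_rejection(toy_uncertainty, toy_correct, rate)
    for rate in (0.0, 0.2, 0.4)
]
```

In this toy case accuracy rises as more uncertain predictions are rejected, which is the behaviour the rejection curves are meant to show. A useful uncertainty score is one where errors concentrate among the rejected samples.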

Accuracy vs Rejection Rate

NLL vs Rejection Rate

Observations:

- The Deep Ensemble achieves the highest accuracy, attributed to the diversity of its members' predictions
- MC Dropout leverages stochasticity but slightly trails the Deep Ensemble
- At high rejection rates (>70%), all methods converge
- The Deep Ensemble shows the best calibration (lowest NLL)

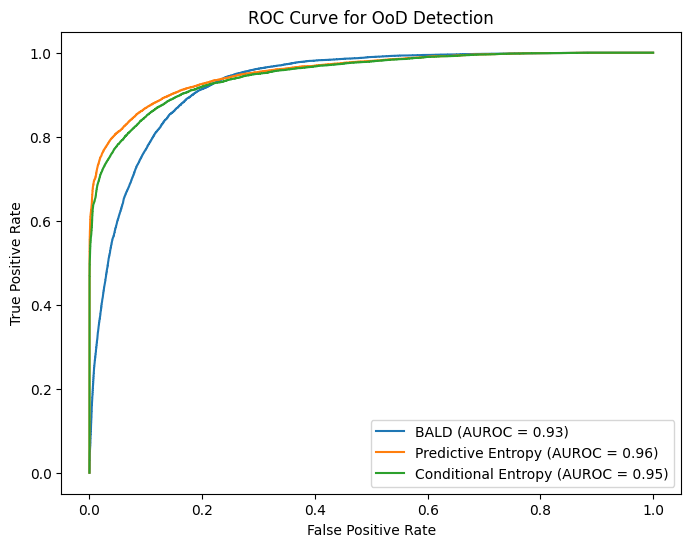

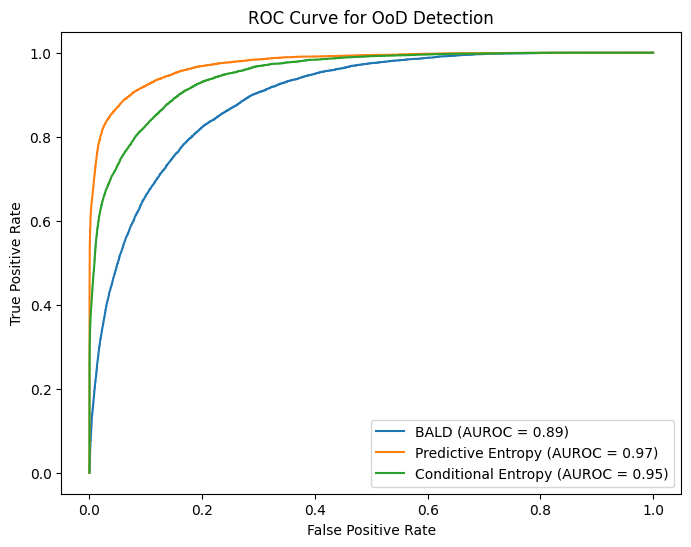

Out-of-Distribution Detection

Detecting whether inputs come from the training distribution (Dirty-MNIST) or a different distribution (Fashion-MNIST).
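OoD detection quality is summarized here with AUROC. One way to read that number: it is the probability that a randomly chosen OoD input receives a higher uncertainty score than a randomly chosen in-distribution input, with ties counted as half. The sketch below computes it by direct pairwise comparison; the function name and scores are made up for illustration, and a library routine would normally be used for large test sets.

```python
from typing import Sequence


def auroc(in_dist_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """AUROC for separating OoD from in-distribution inputs by uncertainty score.

    OoD is treated as the positive class; higher score means "more likely OoD".
    """
    wins = 0.0
    for s_ood in ood_scores:
        for s_in in in_dist_scores:
            if s_ood > s_in:
                wins += 1.0
            elif s_ood == s_in:
                wins += 0.5  # ties count as half
    return wins / (len(in_dist_scores) * len(ood_scores))


# Hypothetical uncertainty scores (e.g. predictive entropy) for each group.
toy_in_dist = [0.1, 0.2, 0.3, 0.7]
toy_ood = [0.6, 0.8, 0.9]
toy_auroc = auroc(toy_in_dist, toy_ood)
```

An AUROC of 0.5 corresponds to a score that carries no information about OoD status, and 1.0 to perfect separation.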

Deep Ensemble

AUROC: BALD 0.89 | Pred. Entropy 0.97 | Cond. Entropy 0.95

MC Dropout

AUROC: BALD 0.93 | Pred. Entropy 0.96 | Cond. Entropy 0.95

Key Finding: Predictive Entropy achieves the highest AUROC for OoD detection with both methods (0.97 for Deep Ensemble, 0.96 for MC Dropout). On BALD, which targets epistemic uncertainty, MC Dropout scores higher than Deep Ensemble in this scenario (0.93 vs 0.89).
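The three scores can be sketched as follows, assuming the usual definitions: predictive entropy is the entropy of the averaged prediction, conditional entropy is the average entropy of the individual members (or dropout passes), and BALD is their difference. The function names and example inputs are illustrative assumptions.

```python
import math
from typing import Dict, Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats, treating 0 * log(0) as 0."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def uncertainty_scores(member_probs: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Scores for one input from per-member (or per-pass) class probabilities."""
    n = len(member_probs)
    n_classes = len(member_probs[0])
    mean = [sum(p[c] for p in member_probs) / n for c in range(n_classes)]
    predictive = entropy(mean)                              # total uncertainty
    conditional = sum(entropy(p) for p in member_probs) / n  # expected member entropy
    return {
        "predictive_entropy": predictive,
        "conditional_entropy": conditional,
        "bald": predictive - conditional,                    # disagreement between members
    }


# Members agree that the input is ambiguous: high entropy, zero BALD.
agree = uncertainty_scores([[0.5, 0.5], [0.5, 0.5]])

# Members are individually confident but disagree: high entropy, high BALD.
disagree = uncertainty_scores([[1.0, 0.0], [0.0, 1.0]])
```

Both toy inputs have the same predictive entropy, but only the second has non-zero BALD, which is why BALD is read as a measure of epistemic rather than total uncertainty.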

Key Takeaways

- ✅ Deep Ensembles win for accuracy and calibration in rejection scenarios

- ✅ Predictive Entropy is most reliable for OoD detection (AUROC 0.96-0.97)

- ✅ MC Dropout is a practical alternative when training multiple models is expensive

- ✅ Rejection-based classification is useful for high-stakes applications (medical, autonomous systems)