🧠

AIMS Coursework

Neural Network from Scratch

Late 2024 — Backpropagation, XOR Problem, Visualization

From Scratch

Backpropagation

Gradient Descent

XOR

About

Step-by-step implementation of a neural network from scratch using only Python and NumPy. Covers the theoretical background of backpropagation, core functions (weighted sum, activation, cross-entropy loss), and training with gradient descent.
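The core functions named above might look like this in NumPy. This is a minimal sketch that assumes a sigmoid activation, binary cross-entropy, and one column per sample. The function names are illustrative and not necessarily those used in the project.

```python
import numpy as np


def weighted_sum(W, x, b):
    """Pre-activation z = W x + b.

    W: (n_out, n_in), x: (n_in, N) with one column per sample, b: (n_out, 1).
    """
    return W @ x + b


def sigmoid(z):
    """Logistic activation, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    """Derivative of the sigmoid: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def cross_entropy(y_hat, y, eps=1e-12):
    """Binary cross-entropy averaged over all samples."""
    y_hat = np.clip(y_hat, eps, 1.0 - eps)  # keep log() finite
    return -np.mean(y * np.log(y_hat) + (1.0 - y) * np.log(1.0 - y_hat))


def cross_entropy_prime(y_hat, y, eps=1e-12):
    """Derivative of the single-sample cross-entropy with respect to y_hat."""
    y_hat = np.clip(y_hat, eps, 1.0 - eps)
    return (y_hat - y) / (y_hat * (1.0 - y_hat))
```

Multiplying `cross_entropy_prime(sigmoid(z), y)` by `sigmoid_prime(z)` collapses to `sigmoid(z) - y`. This cancellation is why pairing a sigmoid output with cross-entropy gives such a simple output-layer gradient.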

Implementation Sections

1. Theory

Theoretical background of backpropagation

2. Data Prep

Loading the data, splitting it into signal and background samples, converting to NumPy arrays

3. Core Functions

Weighted sum, activation, cross-entropy, derivatives

4. Feedforward

Computing activations and predictions

5. Training

Gradient descent to minimize the cost

6. Results

Cost evolution & decision boundary visualization
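The data-prep and feedforward steps listed above can be sketched as follows. The original dataset and its loading code are not shown here, so this sketch substitutes a synthetic dataset: noisy points around the four XOR corners, with label 1 treated as "signal" and label 0 as "background". The 2-3-1 layer sizes and the `init_params` and `feedforward` helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the loaded dataset: noisy samples around the XOR corners.
corners = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
corner_labels = np.array([0, 1, 1, 0], dtype=float)  # XOR truth table
n_per_corner = 25
X = np.repeat(corners, n_per_corner, axis=0)
X = X + 0.1 * rng.standard_normal(X.shape)
y = np.repeat(corner_labels, n_per_corner)

# Split by class: label 1 plays the role of "signal", label 0 of "background".
signal = X[y == 1]
background = X[y == 0]

# Column-per-sample layout used below: inputs (2, N), labels (1, N).
X_cols = X.T
y_row = y.reshape(1, -1)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_params(n_in=2, n_hidden=3, n_out=1, seed=1):
    """Random weights, zero biases (layer sizes are illustrative)."""
    r = np.random.default_rng(seed)
    return {
        "W1": r.standard_normal((n_hidden, n_in)), "b1": np.zeros((n_hidden, 1)),
        "W2": r.standard_normal((n_out, n_hidden)), "b2": np.zeros((n_out, 1)),
    }


def feedforward(params, X_cols):
    """Propagate inputs layer by layer; keep z and a for backpropagation."""
    z1 = params["W1"] @ X_cols + params["b1"]   # weighted sum, hidden layer
    a1 = sigmoid(z1)                            # hidden activations
    z2 = params["W2"] @ a1 + params["b2"]       # weighted sum, output layer
    a2 = sigmoid(z2)                            # predicted probability of class 1
    return {"z1": z1, "a1": a1, "z2": z2, "a2": a2}


params = init_params()
cache = feedforward(params, X_cols)
predictions = (cache["a2"] > 0.5).astype(int)   # hard class labels, shape (1, N)
```

Returning every `z` and `a` rather than only the final prediction is a deliberate choice: backpropagation reuses these intermediate values when applying the chain rule.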

Results

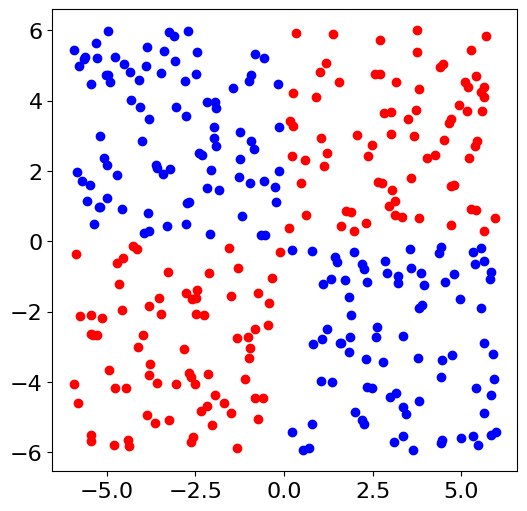

Problem Setup

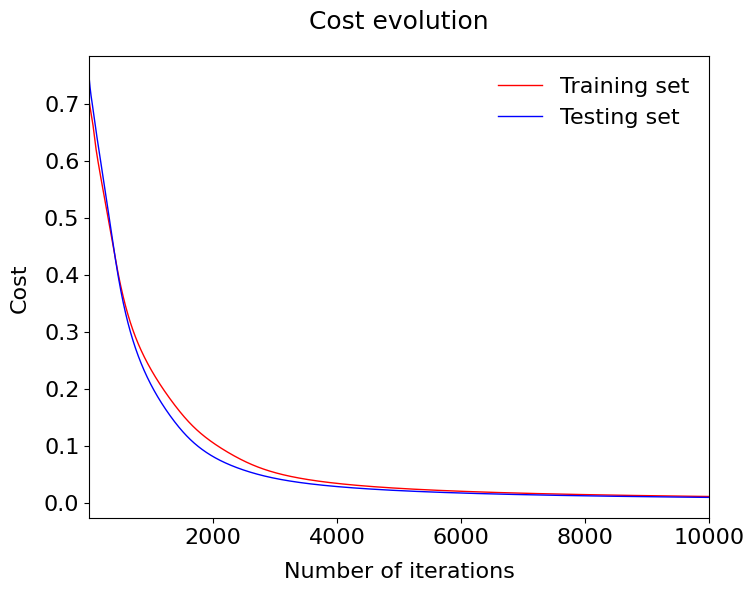

Cost Evolution

Cost decreasing during training
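A cost curve of this kind comes from recording the cost at every gradient-descent step. The sketch below trains a 2-4-1 sigmoid network full-batch on the four XOR points with learning rate 1.0 for 5000 epochs. The hidden-layer size, learning rate, epoch count, and the `train` helper are all illustrative assumptions, not the project's settings.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cross_entropy(a, y, eps=1e-12):
    a = np.clip(a, eps, 1.0 - eps)
    return -np.mean(y * np.log(a) + (1.0 - y) * np.log(1.0 - a))


def train(X, y, n_hidden=4, lr=1.0, epochs=5000, seed=0):
    """Full-batch gradient descent on a 2-layer sigmoid network.

    X has shape (n_in, N) (one column per sample), y has shape (1, N).
    Hyperparameter defaults are hypothetical. Returns params and per-epoch cost.
    """
    rng = np.random.default_rng(seed)
    n_in, N = X.shape
    W1 = rng.standard_normal((n_hidden, n_in)); b1 = np.zeros((n_hidden, 1))
    W2 = rng.standard_normal((1, n_hidden));    b2 = np.zeros((1, 1))
    costs = []
    for _ in range(epochs):
        # Feedforward
        a1 = sigmoid(W1 @ X + b1)
        a2 = sigmoid(W2 @ a1 + b2)
        costs.append(cross_entropy(a2, y))
        # Backpropagation: sigmoid + mean cross-entropy gives dC/dz2 = (a2 - y) / N
        dz2 = (a2 - y) / N
        dW2 = dz2 @ a1.T
        db2 = dz2.sum(axis=1, keepdims=True)
        dz1 = (W2.T @ dz2) * a1 * (1.0 - a1)  # chain rule through the hidden sigmoid
        dW1 = dz1 @ X.T
        db1 = dz1.sum(axis=1, keepdims=True)
        # Gradient-descent update: step against the gradient
        W1 -= lr * dW1; b1 -= lr * db1
        W2 -= lr * dW2; b2 -= lr * db2
    return (W1, b1, W2, b2), costs


X = np.array([[0, 0, 1, 1],
              [0, 1, 0, 1]], dtype=float)   # the four XOR inputs, one per column
y = np.array([[0, 1, 1, 0]], dtype=float)   # XOR labels
params, costs = train(X, y)
print(f"initial cost {costs[0]:.4f} -> final cost {costs[-1]:.4f}")
```

Plotting `costs` against the epoch index gives a cost-evolution curve. With very few hidden units or an unlucky initialization, XOR training can stall in a poor local minimum, so it can be worth trying several seeds.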

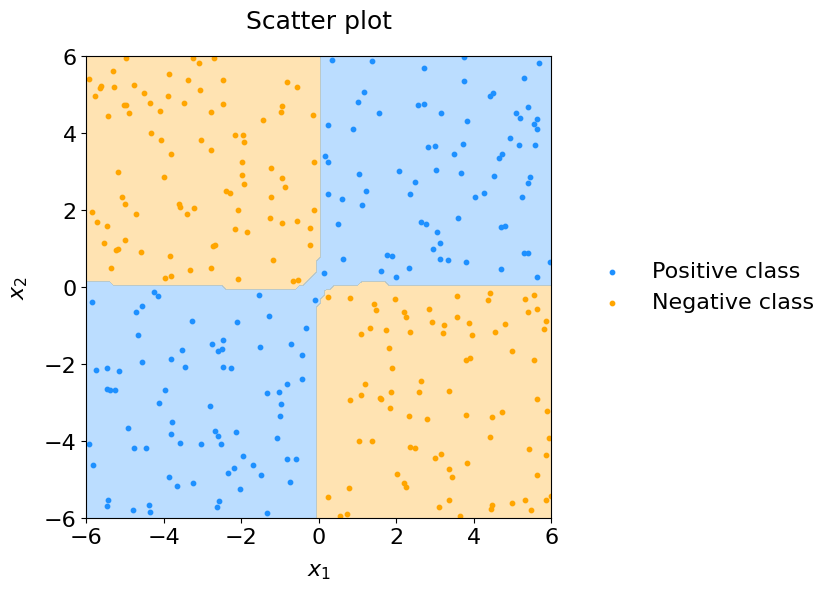

Decision Boundary

Learned separation between the positive and negative classes
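A decision boundary is commonly drawn by evaluating the network on a dense grid of inputs and marking where the predicted probability crosses 0.5. To keep the sketch self-contained, it uses hand-picked weights rather than trained ones, which is an assumption for illustration only. One hidden unit acts like OR, the other like NAND, and the output unit ANDs them, which together compute XOR.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Hand-picked illustrative weights (NOT learned): hidden unit 1 ~ OR,
# hidden unit 2 ~ NAND, output ~ AND of the two, i.e. XOR overall.
W1 = np.array([[20.0, 20.0],
               [-20.0, -20.0]])
b1 = np.array([[-10.0], [30.0]])
W2 = np.array([[20.0, 20.0]])
b2 = np.array([[-30.0]])


def predict_proba(X_cols):
    """Forward pass for inputs of shape (2, N); returns shape (1, N)."""
    a1 = sigmoid(W1 @ X_cols + b1)
    return sigmoid(W2 @ a1 + b2)


# Evaluate on a grid covering the input square; the 0.5 level set of `probs`
# is the decision boundary.
xs = np.linspace(-0.5, 1.5, 201)
xx, yy = np.meshgrid(xs, xs)
grid = np.vstack([xx.ravel(), yy.ravel()])     # shape (2, 201 * 201)
probs = predict_proba(grid).reshape(xx.shape)  # shape (201, 201)
regions = probs > 0.5                          # True = positive class
```

If matplotlib is available, `plt.contour(xx, yy, probs, levels=[0.5])` draws the boundary line over the grid. For XOR, the positive region is a diagonal band between two boundaries, which no single linear separator can produce.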

Key Takeaways

- ✅ Building from scratch exposes the fundamentals: every step of the math has to be written out explicitly

- ✅ Backpropagation is just the chain rule, applied layer by layer to compute the gradient of the cost with respect to every weight and bias

- ✅ Gradient descent iteratively steps the weights against the gradient to reduce the cost, converging toward a (possibly local) minimum

- ✅ Visualization helps reveal what the network has learned
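The chain-rule view of backpropagation can be made concrete for a single sample. This derivation assumes one sigmoid hidden layer, a sigmoid output unit, and the binary cross-entropy cost. The symbols $W^{[l]}, b^{[l]}, z^{[l]}, a^{[l]}, \delta^{[l]}$ and the learning rate $\eta$ are notation introduced here, not taken from the project.

$$
\begin{aligned}
z^{[1]} &= W^{[1]} x + b^{[1]}, & a^{[1]} &= \sigma\big(z^{[1]}\big), \\
z^{[2]} &= W^{[2]} a^{[1]} + b^{[2]}, & \hat{y} &= a^{[2]} = \sigma\big(z^{[2]}\big), \\
C &= -\big[\, y \ln \hat{y} + (1 - y) \ln (1 - \hat{y}) \,\big], \\[4pt]
\delta^{[2]} &\equiv \frac{\partial C}{\partial z^{[2]}}
  = \frac{\partial C}{\partial \hat{y}}\, \sigma'\big(z^{[2]}\big)
  = \frac{\hat{y} - y}{\hat{y}(1 - \hat{y})}\, \hat{y}(1 - \hat{y}) = \hat{y} - y, \\
\frac{\partial C}{\partial W^{[2]}} &= \delta^{[2]}\, a^{[1]\top}, \qquad
\frac{\partial C}{\partial b^{[2]}} = \delta^{[2]}, \\
\delta^{[1]} &\equiv \frac{\partial C}{\partial z^{[1]}}
  = \big(W^{[2]\top} \delta^{[2]}\big) \odot \sigma'\big(z^{[1]}\big),
  \qquad \sigma'\big(z^{[1]}\big) = a^{[1]} \odot \big(1 - a^{[1]}\big), \\
\frac{\partial C}{\partial W^{[1]}} &= \delta^{[1]}\, x^{\top}, \qquad
\frac{\partial C}{\partial b^{[1]}} = \delta^{[1]}, \\
W^{[l]} &\leftarrow W^{[l]} - \eta\, \frac{\partial C}{\partial W^{[l]}}, \qquad
b^{[l]} \leftarrow b^{[l]} - \eta\, \frac{\partial C}{\partial b^{[l]}}.
\end{aligned}
$$

For a batch of $N$ samples with the cost averaged, each gradient becomes the sum of the per-sample gradients divided by $N$.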